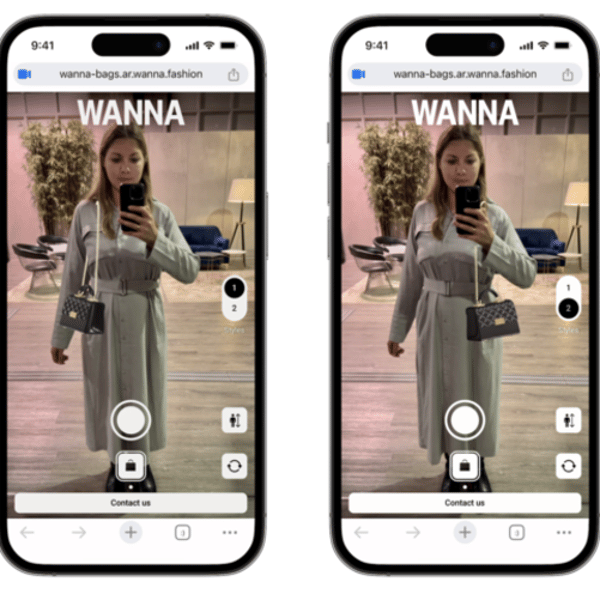

Wanna has been gradually expanding its virtual try-on (VTO) tech and the latest upgrade is its shoulder try-on feature, a new addition to the Bags Virtual Try-On experience.

The company, which was formerly owned by Farfetch but is now part of Perfect Corp, said the feature lets shoppers see how a bag looks when worn on the shoulder, complementing the existing crossbody try-on.

It “allows customers to replicate the real in-store experience by trying on the bag in different ways, virtually style the bag with their outfits, assess its proportions, and make confident purchasing decisions anytime, anywhere”.

It’s undeniably interesting and shows that even an item that’s effectively carried, rather than being worn in the same way a garment is, can still benefit from VTO tech. A potential buyer still can’t tell how heavy the bag is or whether their essential items might fit in it, but the VTO now gives them all the information they might need to judge its visual impact.

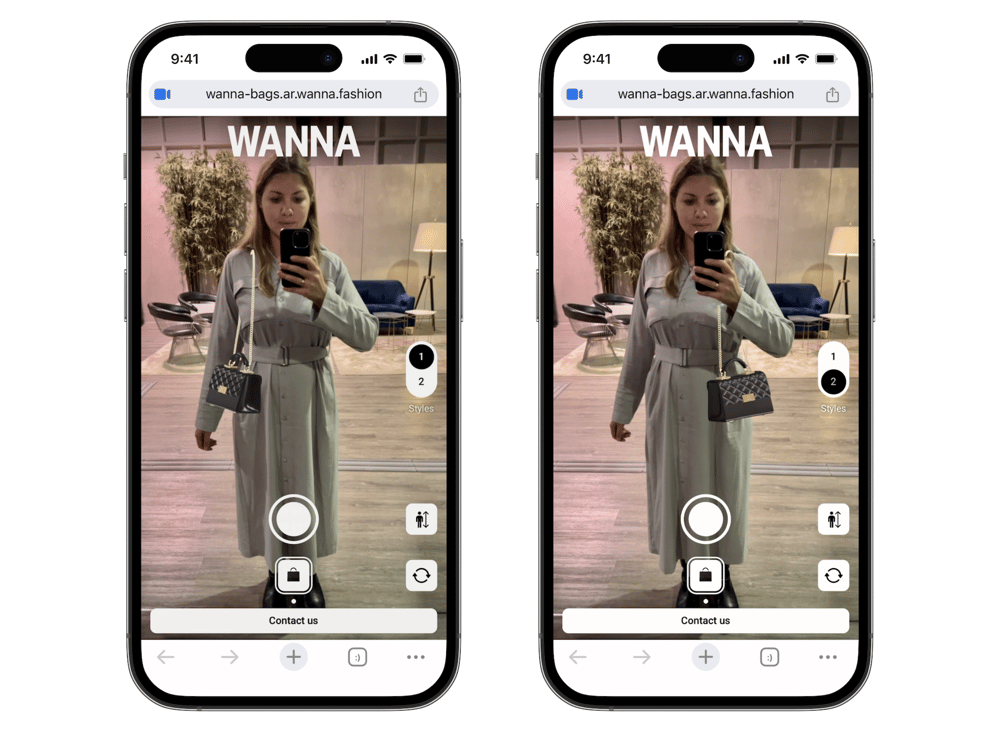

Wanna said that developing a realistic and stable shoulder virtual try-on presented a unique technical challenge: ensuring the bag remains correctly positioned even as users turn sideways to check proportions and outfit matching in the mirror. It addressed this with a proprietary AI-powered engine built to perform in real time.

It offers real-time pose estimation because, in the company’s words, “precise 3D body tracking with low latency is essential for natural bag placement”. The system uses “highly optimised neural architectures specifically designed for mobile devices, balancing speed, accuracy, and energy efficiency to deliver real-time 3D pose and joint estimation – at frame rates fast enough to keep up with user movement”.

The company added that one of the hardest challenges in AR is determining when a virtual object should be partially hidden behind real-world elements like arms or hands. Its AI “handles these scenarios with intelligent segmentation and depth-aware modelling, ensuring realistic layering and preventing unnatural overlaps”.
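To make the occlusion idea concrete, here is a minimal sketch of depth-aware layering in Python. It is not Wanna’s engine, whose internals are not public. It simply assumes that a segmentation step has already produced a per-pixel body mask and that depth estimates exist for both the body and the rendered bag. Every name in it (`composite_bag`, `body_mask`, `bag_depth` and so on) is hypothetical.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # RGB


def composite_bag(
    camera: List[List[Pixel]],
    bag_color: List[List[Optional[Pixel]]],  # None where the bag is not rendered
    bag_depth: List[List[float]],            # assumed distance of the bag from the camera
    body_mask: List[List[bool]],             # assumed segmentation output (True = arm, hand, torso)
    body_depth: List[List[float]],           # assumed distance of the body from the camera
) -> List[List[Pixel]]:
    """Draw the bag over the camera frame, except where the body is in front of it."""
    out: List[List[Pixel]] = []
    for y, row in enumerate(camera):
        out_row: List[Pixel] = []
        for x, cam_px in enumerate(row):
            bag_px = bag_color[y][x]
            # The bag is hidden only where a body pixel is closer to the camera.
            occluded = body_mask[y][x] and body_depth[y][x] < bag_depth[y][x]
            if bag_px is not None and not occluded:
                out_row.append(bag_px)
            else:
                out_row.append(cam_px)
        out.append(out_row)
    return out


# Toy 1x3 frame: background, an arm in front of the bag, the torso behind the bag.
camera = [[(10, 10, 10), (20, 20, 20), (30, 30, 30)]]
bag_color = [[None, (200, 0, 0), (200, 0, 0)]]
bag_depth = [[0.0, 1.5, 1.5]]
body_mask = [[False, True, True]]
body_depth = [[0.0, 1.0, 2.0]]

frame = composite_bag(camera, bag_color, bag_depth, body_mask, body_depth)
```

In this toy frame the middle pixel keeps the camera image because the arm is closer than the bag, while the last pixel shows the bag because the torso sits behind it.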

And it works in selfie mode, mirror view, and side perspectives, “maintaining correct positioning and scale across camera modes”.

Behind the tech is a proprietary dataset of about 487,000 training images, including roughly 169,000 manually labelled, 127,000 AI-synthesised, and 190,000 pseudo-labelled samples. The large-scale, diverse dataset, spanning different body types, lighting conditions, and angles, “was critical in training the system to deliver accurate and visually consistent results across product styles and real-world usage scenarios”.
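For a sense of how that training data breaks down, the quoted subset sizes can be turned into shares with a few lines of Python. Taken at face value, the three subsets add up to 486,000, just under the headline figure, which suggests the published numbers are rounded. The variable names here are purely illustrative.

```python
# Subset sizes as quoted by the company (presumably rounded).
subsets = {
    "manually labelled": 169_000,
    "AI-synthesised": 127_000,
    "pseudo-labelled": 190_000,
}

total = sum(subsets.values())  # 486000

# Share of each subset, as a percentage of the summed total.
shares = {name: round(100 * count / total, 1) for name, count in subsets.items()}

for name, pct in shares.items():
    print(f"{name}: {pct}%")
```

On these figures, manually labelled images make up a little over a third of the set, with pseudo-labelled samples forming the largest single share.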

Wanna also “fine-tuned the shoulder try-on experience based on extensive user research with luxury shoppers”.

The new VTO experience is optimised for digital marketing, with shareable links that can be embedded into newsletters, Instagram, WeChat, and TikTok campaigns, to drive interaction and product visibility. And it’s now available via SDK integration.

Copyright © 2025 FashionNetwork.com All rights reserved.