For just over two years, technology leaders at the forefront of developing artificial intelligence had made an unusual request of lawmakers. They wanted Washington to regulate them.

The tech executives warned lawmakers that generative A.I., which can produce text and images that mimic human creations, had the potential to disrupt national security and elections, and could eventually eliminate millions of jobs.

A.I. could go “quite wrong,” Sam Altman, the chief executive of OpenAI, testified in Congress in May 2023. “We want to work with the government to prevent that from happening.”

But since President Trump’s election, tech leaders and their companies have changed their tune, and in some cases reversed course, with bold requests of government to stay out of their way, in what has become the most forceful push to advance their products.

In recent weeks, Meta, Google, OpenAI and others have asked the Trump administration to block state A.I. laws and to declare that it is legal for them to use copyrighted material to train their A.I. models. They are also lobbying to use federal data to develop the technology, as well as for easier access to energy sources for their computing demands. And they have asked for tax breaks, grants and other incentives.

The shift has been enabled by Mr. Trump, who has declared that A.I. is the nation’s most valuable weapon to outpace China in advanced technologies.

On his first day in office, Mr. Trump signed an executive order to roll back safety testing rules for A.I. used by the government. Two days later, he signed another order, soliciting industry suggestions to create policy to “sustain and enhance America’s global A.I. dominance.”

Tech companies “are really emboldened by the Trump administration, and even issues like safety and responsible A.I. have disappeared completely from their concerns,” said Laura Caroli, a senior fellow at the Wadhwani AI Center at the Center for Strategic and International Studies, a nonprofit think tank. “The only thing that counts is establishing U.S. leadership in A.I.”

Many A.I. policy experts worry that such unbridled growth could be accompanied by, among other potential problems, the rapid spread of political and health disinformation; discrimination by automated financial, job and housing application screeners; and cyberattacks.

The reversal by the tech leaders is stark. In September 2023, more than a dozen of them endorsed A.I. regulation at a summit on Capitol Hill organized by Senator Chuck Schumer, Democrat of New York and the majority leader at the time. At the meeting, Elon Musk warned of “civilizational risks” posed by A.I.

In the aftermath, the Biden administration started working with the biggest A.I. companies to voluntarily test their systems for safety and security weaknesses and mandated safety standards for the government. States like California introduced legislation to regulate the technology with safety standards. And publishers, authors and actors sued tech companies over their use of copyrighted material to train their A.I. models.

(The New York Times has sued OpenAI and its partner, Microsoft, accusing them of copyright infringement regarding news content related to A.I. systems. OpenAI and Microsoft have denied those claims.)

But after Mr. Trump won the election in November, tech companies and their leaders immediately ramped up their lobbying. Google, Meta and Microsoft each donated $1 million to Mr. Trump’s inauguration, as did Mr. Altman and Apple’s Tim Cook. Meta’s Mark Zuckerberg threw an inauguration party and has met with Mr. Trump numerous times. Mr. Musk, who has his own A.I. company, xAI, has spent nearly every day at the president’s side.

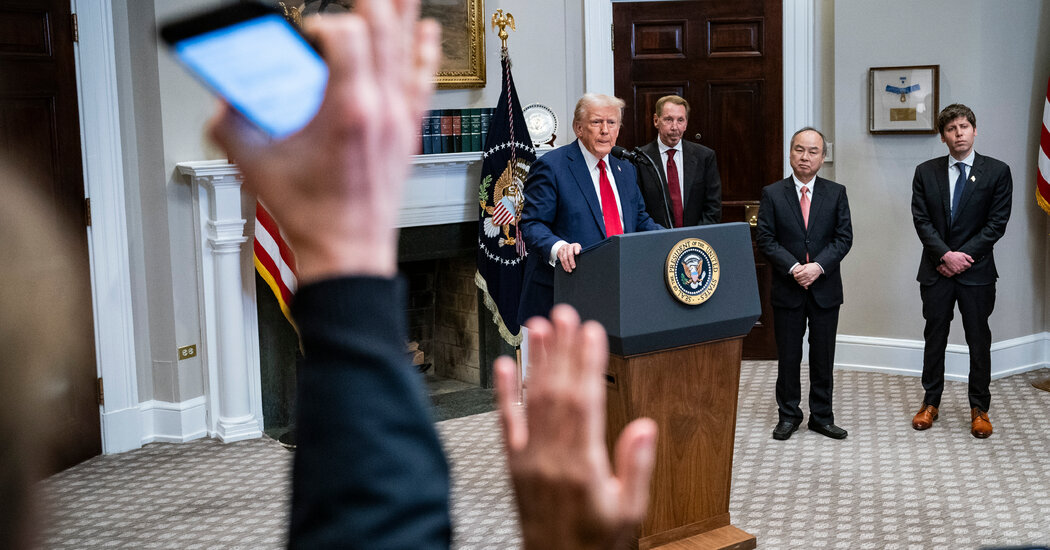

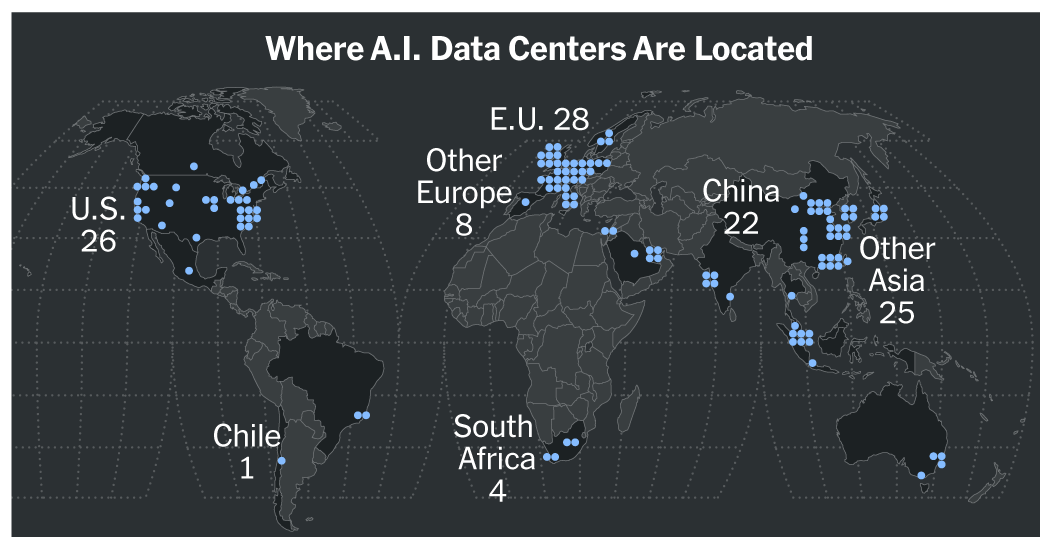

In turn, Mr. Trump has hailed A.I. announcements, including a plan by OpenAI, Oracle and SoftBank to invest $100 billion in A.I. data centers, which are huge buildings full of servers that provide computing power.

“We have to be leaning into the A.I. future with optimism and hope,” Vice President JD Vance told government officials and tech leaders last week.

At an A.I. summit in Paris last month, Mr. Vance also called for “pro-growth” A.I. policies, and warned world leaders against “excessive regulation” that could “kill a transformative industry just as it’s taking off.”

Now tech companies and others affected by A.I. are offering responses to the president’s second A.I. executive order, “Removing Barriers to American Leadership in Artificial Intelligence,” which mandated development of a pro-growth A.I. policy within 180 days. Hundreds of them have filed comments with the National Science Foundation and the Office of Science and Technology Policy to influence that policy.

OpenAI filed 15 pages of comments, asking the federal government to pre-empt states from creating A.I. laws. The San Francisco-based company also invoked DeepSeek, a Chinese chatbot created for a small fraction of the cost of U.S.-developed chatbots, saying it was an important “gauge of the state of this competition” with China.

If the Chinese developers “have unfettered access to data and American companies are left without fair use access, the race for A.I. is effectively over,” OpenAI said, requesting that the U.S. government turn over data to feed into its systems.

Many tech companies also argued that their use of copyrighted works for training A.I. models was legal and that the administration should take their side. OpenAI, Google and Meta said they believed they had legal access to copyrighted works like books, films and art for training.

Meta, which has its own A.I. model, called Llama, pushed the White House to issue an executive order or other action to “clarify that the use of publicly available data to train models is unequivocally fair use.”

Google, Meta, OpenAI and Microsoft said their use of copyrighted data was legal because the information was transformed in the process of training their models and was not being used to replicate the intellectual property of rights holders. Actors, authors, musicians and publishers have argued that the tech companies should compensate them for obtaining and using their works.

Some tech companies have also lobbied the Trump administration to endorse “open source” A.I., which essentially makes computer code freely available to be copied, modified and reused.

Meta, which owns Facebook, Instagram and WhatsApp, has pushed hardest for a policy recommendation on open sourcing, which other A.I. companies, like Anthropic, have said increases vulnerability to security risks. Meta has said open source technology speeds up A.I. development and can help start-ups catch up with more established companies.

Andreessen Horowitz, a Silicon Valley venture capital firm with stakes in dozens of A.I. start-ups, also called for support of open source models, which many of its companies rely on to create A.I. products.

And Andreessen Horowitz gave the starkest arguments against new regulations for A.I. Existing laws on safety, consumer protection and civil rights are sufficient, the firm said.

“Do prohibit the harms and punish the bad actors, but do not require developers to jump through onerous regulatory hoops based on speculative fear,” Andreessen Horowitz said in its comments.

Others continued to warn that A.I. needed to be regulated. Civil rights groups called for audits of systems to ensure they do not discriminate against vulnerable populations in housing and employment decisions.

Artists and publishers said A.I. companies needed to disclose their use of copyrighted material and asked the White House to reject the tech industry’s arguments that their unauthorized use of intellectual property to train their models was within the bounds of copyright law. The Center for AI Policy, a think tank and lobbying group, called for third-party audits of systems for national security vulnerabilities.

“In any other industry, if a product harms or negatively hurts consumers, that project is defective and the same standards should be applied for A.I.,” said K.J. Bagchi, vice president of the Center for Civil Rights and Technology, which submitted one of the requests.